My leadership coach once said: nobody can promote you to leader; you have to do it yourself. This is how I managed to promote myself to Tech Lead.

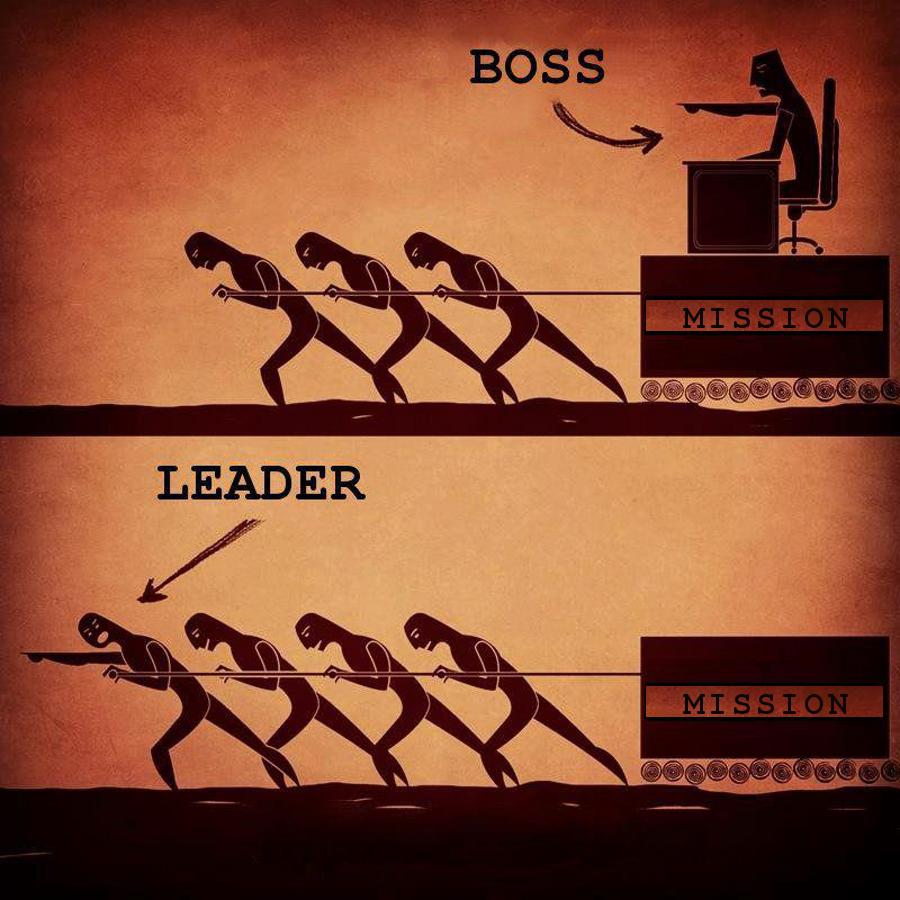

Before getting into the story, I want to remind you of the difference between a leader and a boss. A boss is someone in charge because of hierarchy. Maybe he has been working for the company longer than you, or he is the owner’s brother. The most important thing to remember is that this title was given to him by someone else. A leader is someone in charge because people follow him. Read the previous sentence again so you can fully appreciate what it means. Nobody can promote you to leader. Even if they promote you to boss, you are not a leader if people don’t follow you.

You were probably already aware of the difference between a leader and a boss before reading the previous paragraph. What most people don’t realize is what I said in the introduction to this post: nobody can promote you to leader. If you want to be the leader of your group, you have to step up to the front line and show your team the path. If you do it right, people will start to follow you. And from the very first moment someone trusts you to lead the way, you become a leader.

My experience

Joining the team

Shortly after joining Tecnalia, I was involved in a big project aiming to find a way to version large image datasets. I was hired as a developer, so my first task was to study the current state of the project. The first step was to read through the client requirements. They can be summarised as:

- Versioning large datasets.

- Provide a search mechanism for existing datasets.

- Support project creation based on datasets.

The development was carried out by 4 developers (1 frontend, 2 big data, 1 DevOps). I was the 5th developer, but my role wasn’t defined. I started talking with the DevOps expert to understand how the system was being developed and deployed. At this point, I realised that the development team was fragmented. The DevOps expert was working on the project-support requirement, and the dependencies on the dataset versioning were communicated through an OpenAPI spec. As a result, two separate Java services had emerged from team isolation. The CI/CD pipeline was also weak: the application was deployed using Vagrant to create Ubuntu machines that then ran poorly designed Docker images, and there was no defined way to share and version those images.

To increase the fun, the Vagrant system was deployed on a Windows machine using a lot of custom scripting. My first attempt to install Vagrant on Linux and replicate the environment failed catastrophically. I started digging into the .bat files and rewriting them as .sh. It wasn’t hard, but I quickly found that there were missing dependencies. After a week or so, I still hadn’t managed to start the Vagrant system, but I had gained enough insight into how the system was designed to write an equivalent docker-compose.yml file. In the end, we just had a few containers that needed to be connected. This file was an incredible success, and when I shared it with the rest of the team, they quickly followed my lead so they could start the environment on their own machines.

Happy with my progress as a DevOps engineer, I tried to understand the Java code. It was Java 8 running JAX-RS code generated from the Swagger definition. I struggled to set up VS Code to work properly with Java 8 and finally installed IntelliJ IDEA. I don’t know why, but the code looked really complex, even though it was only acting as a simplification layer over the GitLab API. At this point, I realised that there was a huge dependency on the dataset versioning layer, and it wasn’t working as I expected.

Questioning current decisions

I started to ask questions about how the dataset versioning worked. The plan was to build a SQL database to store references to every uploaded file. These files would then be uploaded to an arbitrary storage backend. At that moment, only HDFS was supported, but there were plans to support cloud providers. I quickly discovered that they were also using Java 8, but this time with Spring Boot, and the Swagger spec was generated from code annotations. They were also using a Eureka service discovery architecture designed to discover storage providers automatically.

Around that time, a workmate not involved in this project suggested using DVC to track the different files. It provided functionality to track files directly in git repositories and the ability to upload them to arbitrary remotes. As a POC, the big data team started to use DVC to track files by making shell calls to the DVC executable and parsing its stdout. I was annoyed by this approach because it made the application run really slowly, and it was really easy to craft inputs that made the system fail. I kept thinking: why not just use DVC? It meets the 3 main requirements.

Testing

I thought that we could easily adapt the DVC code to meet our specific requirements, so I started to read the DVC documentation and codebase to gain a better insight into how it worked. The main reason not to simply switch over was that the team didn’t want to throw away all the existing development. It was a fair point, but there was a catch. There were no unit tests in the entire platform, and only a few integration tests had been written for a small portion of the application. I knew that this was going to be a problem, but it was hard to prove my point.

I had already spent two months understanding the project, and by now I could say that I understood the application requirements. This enabled me to write e2e tests. The management wasn’t aware of the benefits of automated test suites, so I asked for permission to write an e2e test suite to ensure the application’s quality. After a few days, I had a simple Go script that ran all the basic e2e tests I could come up with based on the current API specification. And you can guess what happened next. After a few weeks of back-and-forth with the development team over the bugs it found, we managed to stabilise both the test suite and the application. Suddenly, the development team started to notice when they broke things without realising it, and they gained confidence in the test suite. I slowly extended the test coverage; knowing the application well allowed me to write tests that I knew would find bugs. It wasn’t too hard, because the code wasn’t well structured and there was no other kind of testing.

This allowed our team to reach the next milestone confident in the functionality of the application. Although there were a lot of known bugs and limitations, the happy path was working and was automatically tested after each commit. After the milestone, I had a discussion with the team explaining why we couldn’t continue the development this way.

- The team was fragmented: 3 different services had been created, not because they were needed, but because developers worked in different codebases.

- Complexity was growing exponentially: there was a huge variety of technologies that were overkill for the situation.

- It was impossible to write unit tests because the code wasn’t well structured.

- Calling DVC from the shell was a bad idea; we should either reimplement the functionality in Java 8 or switch to Python.

Promotion

The priority was to work as a team, and this was the point where I became a Tech Lead. I started by creating a board with all the individual development tasks, so we could track what needed to be done. There was a previous board, but it was organised around the client’s functional requirements; we needed a board for development tasks. We didn’t need separate services, so we agreed on a single Spring Boot server with code generated from an OpenAPI specification. We created a beautiful monolith. Now that we were working in the same git repository, I suggested following the git-flow pattern to organise our work. This included code reviews, which had been missing before. The team complained about the reviews because they felt they were a waste of time. So, leading by example, I did all the reviews myself at that point. I locked the master and develop branches, and I was the only one allowed to merge anything. The first reviews were hard, but they became easier as I understood the code. Soon I was able to detect duplicated functionality that the developers weren’t aware of because they didn’t talk to each other. Some complex reviews required a call to resolve the issues, and this is how we started doing pair programming sessions. Although I wasn’t a Java expert, I learned quickly from my more experienced teammates and could easily share that knowledge across the team.

A drawback was that time was flying by and we were not delivering new features. We were just learning to work as a team and refactoring the existing functionality. To show that we were making progress, I had the idea to set up a SonarQube integration. It nearly exploded with warnings at the beginning. I shared it with my team, and they quickly started to fix issues and, more importantly, they didn’t create new ones! The graphs generated by SonarQube allowed me to justify our work.

This took us the whole summer, almost 3 months. But I have to say that the developers were happier than ever, even though they had suffered a lot learning to work as a team and rewriting most of the codebase. It was clear that the application was working much better, and now it was possible to keep extending it with more confidence. We had stopped the snowball rolling down the hill.

Most of the functionality consisted of applying simple business logic over the GitLab API. Also, writing unit tests in Java is hard… This made it difficult for me to convince my team to invest time in them; they were happy with the e2e tests. Moreover, I couldn’t write simple unit tests to show them how to do it: there were no interfaces in the code, so applying dependency injection wasn’t possible, and rewriting everything again was going to take a lot of time. But then, a new opportunity appeared. We addressed the problem of calling DVC from the shell by reimplementing the functionality we needed in Java. This was a textbook TDD opportunity! I took the initiative and wrote the first DVC class implementation with proper unit tests. The team was still a little sceptical, but I quickly showed them how these tests let me exercise many different workflows and validate them within milliseconds. So they started to follow my example. To keep the motivation up, I researched how to add test coverage reports to SonarQube so we could see our improvement over time. This allowed the team to deliver new functionality at a steady rate, with acceptable quality and without breaking existing functionality.
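The Java DVC class isn’t shown in this post, so here is a hypothetical Go sketch of the same testing idea: the storage remote sits behind an interface, so the push logic can be unit-tested against an in-memory fake in milliseconds. The names `Remote`, `Push` and `memRemote` are illustrative assumptions, and the content-addressed key (MD5 digest split into a two-character directory plus the remaining 30 characters) is modelled on the cache layout DVC 2.x used, not on the project’s actual code.

```go
package main

import (
	"crypto/md5"
	"encoding/hex"
	"sort"
)

// Remote is the seam that makes the logic testable: production code would
// talk to real storage, while tests pass an in-memory fake. (Hypothetical.)
type Remote interface {
	Exists(key string) bool
	Put(key string, data []byte) error
}

// cacheKey returns a DVC-style content address: the MD5 hex digest split into
// a two-character prefix and the rest (assumption: DVC 2.x cache layout).
func cacheKey(data []byte) string {
	sum := md5.Sum(data)
	h := hex.EncodeToString(sum[:])
	return h[:2] + "/" + h[2:]
}

// Push uploads only the contents the remote does not already have and
// returns the keys that were actually sent, sorted for deterministic output.
// Identical contents share a key, so they are uploaded once.
func Push(r Remote, files map[string][]byte) ([]string, error) {
	var pushed []string
	for _, data := range files {
		key := cacheKey(data)
		if r.Exists(key) {
			continue
		}
		if err := r.Put(key, data); err != nil {
			return nil, err
		}
		pushed = append(pushed, key)
	}
	sort.Strings(pushed)
	return pushed, nil
}

// memRemote is the in-memory fake used by unit tests.
type memRemote struct{ objects map[string][]byte }

func (m *memRemote) Exists(key string) bool {
	_, ok := m.objects[key]
	return ok
}

func (m *memRemote) Put(key string, data []byte) error {
	m.objects[key] = data
	return nil
}
```

Because `Push` depends only on the interface, a real remote (HTTP, HDFS or a cloud bucket) can be plugged in later without touching the logic or its tests.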

During the DVC reimplementation, we detected the need for a custom remote compatible with the DVC HTTP remote interface. It was a really simple requirement, but it had to be able to upload and download files of arbitrary size. It was also a strong candidate to become the bottleneck of the system. Before adding it to our new monolith, I did a small POC in Go. It turns out that implementing streaming services is much easier in Go than in Java, and the go-cloud/blob project did most of the heavy lifting. After a few refactors, it quickly became a cornerstone of the entire system. Being a separate service allows it to scale independently, which is great for handling high volumes of uploads and downloads. Being written in Go makes it simple and easy to deploy!

The project continued for a few more months. Due to internal changes, 2 people left the team and another 2 joined. Thanks to the earlier efforts to improve code quality, the ramp-up was much easier, and the new joiners were able to produce code in less than a month.

Along with these responsibilities, I held weekly meetings with the client to report progress and ensure the project was moving in the right direction. I also managed the task board to keep it up to date.

Conclusion

One way or another, I always knew that I wanted to be a leader. Helping others grow is one of the most satisfying things I’ve ever done. In high school and at university, I always wanted to become a teacher. But I ruled that out when I realised that formal education is tightly regulated and you are forced to repeat the same outdated concepts over and over. Around the same time, I developed a taste for organising workshops. They were much more interesting, and people seemed really engaged. When I started my professional career, I discovered development teams, and that is when I realised that my ideal future position could be leading them. Nevertheless, I think I still need more professional experience to lead teams properly. That is one of the main reasons I decided to leave Tecnalia and join Form3: I need more development experience.